Current Projects

- HFSP Deciphering the Impact of Viral Infections on Human Neurocognitive Functions ex vivo (DeVINE)

- NUMEV Analysis of Inter-personnel information transfer during assisted walking (ASSIST-W)

- EU RIA Artificial Intelligence for Connected Cooperative Automated Mobility (AI4CCAM)

- ANR Connecting an In-vitro mini-Brain to a Robot with a novel Bio-engineering platform (CIBORG)

- ANR MRSEI MAchines with in-Vitro human bRaIn Cells for biological AI (MAVeRIC-AI)

- CEFIPRA Human Guided Impedance Control of Cobotic Arm (HICOBOT)

Recent Projects

- JST ERATO Inami Jizai Body Project

- Decoding human intentions using prediction errors

- Tool Cognition in Robots

- Prediction induced motor contagions in humans

- Optimizing human perceptions during robot interactions

- Neuroscience of human physical interactions

- Anxiety induced motor deteriorations

Japan Science and Technology

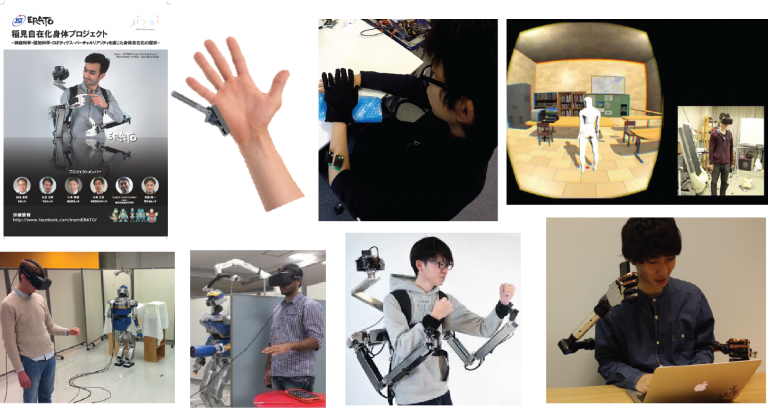

ERATO ‘Inami Jizai Body Project’

The ERATO program is one of the most prestigious funding programs in Japan, awarded for the development of new technologies and scientific fields that are viewed as crucial for the future. The ERATO Jizai Body project is headed by the University of Tokyo and comprises three groups that investigate issues in engineering, psychology and neuroscience related to fourth-generation human body editing technologies. In this project we aim to understand the neuroscience behind sensorimotor ‘embodiment’:

- How does the embodiment of limbs/bodies modify the human brain?

- What are the neural and attentional limits to the number of limbs/bodies that can be embodied?

- Whether and how does the embodiment of a limb/body aid in its control and learning?

- Development of a computational understanding of which devices are considered ‘comfortable’ by users.

- How does embodiment affect perception, emotion and, consequently, acceptance of limbs/bodies by the user and by society?

In engineering, we are interested in:

- Development of new 4th generation body editing technologies.

- Development of an efficient interface between the human user and the embodied device, both for reading the user's intention and for feeding back relevant device behaviors.

- Development of intelligence in the devices that would enable them to work without continuous guidance, but without interfering with the user's behavior.

- Development of movement control that is not only safe for the user, but also perceived as safe by the user.

The project, which is headed by the University of Tokyo, includes top universities in Japan, among them Keio University, Waseda University, Toyohashi University of Technology and the University of Electro-Communications (UEC), Tokyo.

See more details here.

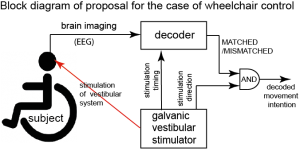

Decoding intentions using prediction errors

The biggest challenge for brain-machine interfaces (BMI) is to quickly detect, from brain signals, what movement a human wants to perform. To date, the best performance of decoders (even two-class decoders) has not exceeded 70% when movements are only imagined and not made. Even when subjects make movements, decoding accuracy has never exceeded 85% without including the signals related to the movement itself. In this project, in collaboration with Yasuharu Koike of Tokyo Institute of Technology (TIT) and Hideyuki Ando of Osaka University, we have developed a novel methodology that radically improves this performance, giving ~90% correct intention decoding within 100 ms of the subject's imagined intention (Science Advances 4 (5) eaaq0183). Specifically, motivated by motor neuroscience studies (including our own) on forward models in the brain, we proposed to decode not what movement a user wants or imagines, but whether or not the movement they want matches the sensory feedback sent to them (using a subliminal stimulator). This work has led to a patent application by CNRS, AIST and TIT (International patent PCT/FR2016/051813: 2016001348) and a Japanese patent application (特願2020-108549), and was reported in the CNRS magazine. For the past three years, we have been collaborating with the group of Prof. N. Birbaumer in Tübingen, Germany, to explore the use of this technique for communication with patients with locked-in syndrome. The first results with an ALS patient were reported in Yoshimura et al. 2021 (Cerebral Cortex Communications tgab046).

N. Yoshimura, K. Umetsu, A. Tonin, Y. Maruyama, K. Harada, A. Rana, G. Ganesh, U. Chaudhary, Y. Koike, N. Birbaumer (2021). Binary semantic classification using cortical activation with Pavlovian-conditioned vestibular responses in healthy and locked-in individuals. Cerebral Cortex Communications. tgab046, https://doi.org/10.1093/texcom/tgab046

Y. Shi, G. Ganesh, H. Ando, Y. Koike, E. Yoshida, N. Yoshimura (2021). Galvanic Vestibular Stimulation-Based Prediction Error Decoding and Channel Optimization. Int J Neural Syst. 31(11): 2150034. doi: 10.1142/S0129065721500349.

G. Ganesh, K. Nakamura, S. Saetia, AM. Tobar, E. Yoshida, H. Ando, N. Yoshimura, Y. Koike (2018). Utilizing sensory prediction errors for movement intention decoding: a new methodology. Science Advances. 4 (5): eaaq0183.

K. Nakamura, G. Ganesh, AM. Tobar, S. Saetia, N. Yoshimura, E. Yoshida, H. Ando, Y. Koike (2017). Utilizing prediction errors to decode movement intention: a new technique. 17th Winter workshop on the mechanisms of brain and mind, January 2017.

Tool cognition in robots

Prowess in tool use distinguishes humans from other animals, and it has therefore been a topic of interest in robotics as well. In robotics, however, tool use is generally seen as a learning problem. A human, on the other hand, is often able to recognize objects that they see for the first time as potential tools for a task, and to use them without requiring any learning. For the past decade we have been trying to understand how this is possible. We investigated tool use in humans using neuroscientific experiments and mathematical modelling (Nature Communications 5, 4524) and developed a functional characterization of human tools (Proc IEEE ICRA 2018), as well as a functional explanation of the sense of ‘embodiment’ observed in human tool and limb embodiment studies (Neuroscience Research 104: 31-37). In collaboration with Dr. Tee Keng Peng of A*STAR, Singapore, we were able to integrate the understanding from these results into a robot control algorithm. Our algorithm enables a robot that has had no prior experience with tools to automatically recognize an object in its environment (seen for the first time) as a potential tool for an otherwise unattainable task, and then to use the tool to perform the task without any learning. We presented the prototype experiments with toy tools at ICRA 2018 (Proc IEEE ICRA 2018) and have now developed a more complete tool cognition framework that enables the robot to use daily life objects as tools and to plan the grasp and actions with the tool, considering the task motions and obstacles, before finally performing the task with the tool. Furthermore, the framework allows for flexibility in tool use, where the same tool can be adapted for different tasks, and different tools for the same task, all without any prior learning or observation of tool use.
We demonstrate the possibilities offered by our tool cognition framework in several robot experiments with both toy and real objects as tools (Tee et al., under review).

K.P. Tee, S. Cheong, J. Li, G. Ganesh (2021). A Framework for tool cognition in robots without prior tool learning or observation. Preprint on HAL.

K.P. Tee, J. Li, L.T.P. Chen, K.W. Wan, G. Ganesh (2018). Towards emergence of tool use in robots: automatic tool recognition and use without prior tool learning. IEEE International Conference on Robotics and Automation (ICRA), 2018.

Aymerich-Franch, G. Ganesh (2016). The role of functionality in the body model for self-attribution. Invited review in Neuroscience Research. 104: 31-37.

G. Ganesh, T. Yoshioka, R. Osu, T. Ikegami (2014). Immediate tool incorporation processes determine human motor planning with tools. Nature Communications. 5:4524.

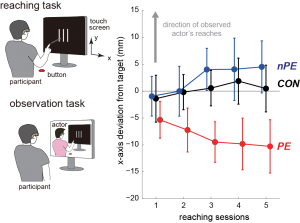

Prediction induced motor contagions in humans

Motor contagions refer to implicit effects on one’s actions induced by observed actions. Motor contagions have traditionally been believed to be induced simply by action observation, and to cause an observer’s action to become similar to the action observed. In contrast, together with Tsuyoshi Ikegami in Japan, we discovered and reported a new motor contagion that is induced only when the observation is accompanied by prediction errors, that is, differences between the actions one observes and those one predicts or expects. We first discovered the new contagion in an experiment with darts professionals (Scientific Reports 4: 6989) that attracted extensive media coverage in popular press outlets including Scientific American magazine, Yahoo Japan, Asahi Shimbun (Japan) and Mainichi Shimbun (Japan), as well as the CNRS magazine. The study was selected to be displayed as a poster in the Paris underground during the Summer Olympics of 2016. We have since extended this work to explain the computational processes underlying this new contagion (eNeuro 4(6)) and are now working with sports scientists to examine other highly dynamic sports (eLife 2018; 7: e33392). We have also shown that these contagions can be used to improve the efficiency of human–robot interactions (PLoS ONE 2018). A detailed overview of our findings, and of how they relate to other motor contagions discovered earlier, is provided in the book “Handbook of Embodied Cognition and Sport Psychology” (MIT Press, M. Cappuccio, Ed.).

T. Ikegami, H. Nakamoto, G. Ganesh. Action imitative and Prediction-error induced contagions in human actions. In Handbook of Embodied Cognition and Sport Psychology. M.L. Cappuccio, Ed., MIT Press, 381-412.

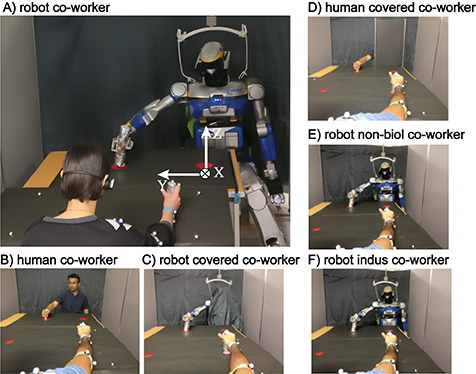

Vasalya, G. Ganesh, A. Kheddar (2018). More than just co-workers: Presence of humanoid robot co-worker influences human performance. PLoS ONE. 13(11): e0206698.

T. Ikegami*, G. Ganesh*, T. Takeuchi, H. Nakamoto (2018). Prediction error induced motor contagions in human behaviors. eLife. 7: e33392. doi: 10.7554/eLife.33392.

T. Ikegami, G. Ganesh (2017). Shared Mechanisms in the Estimation of Self-Generated Actions and the Prediction of Other’s Actions by Humans. eNeuro. 4 (6) ENEURO.0341-17.2017.

Sport : être mauvais est-il contagieux? By Martin Kopp on T. Ikegami, G. Ganesh (2014) in the CNRS le Journal, online CNRS magazine.

T. Ikegami, G. Ganesh (2014). Watching novice action degrades expert’s performance: Evidence that the motor system is involved in action understanding by humans. Scientific Reports. 4:6989.

Optimizing human perception during robot interactions

In several sub-projects, we are exploring how to modulate robot behaviors through an understanding of human perception. We have shown that robots can benefit co-workers by their mere presence. We are also working toward a computational understanding of human comfort while working with robots, with the aim of developing robot interactions that are perceived as ‘better’ by humans.

D. Héraïz-Bekkis, G. Ganesh, E. Yoshida, N. Yamanobe (2020). Robot Movement Uncertainty Determines Human Discomfort in Co-worker Scenarios. IEEE 6th International Conference on Control, Automation and Robotics (ICCAR), Singapore. pp. 59-66.

Vasalya, G. Ganesh, A. Kheddar (2018). More than just co-workers: Presence of humanoid robot co-worker influences human performance. PLoS ONE. 13(11): e0206698.

S. Kato, N. Yamanobe, G. Venture, G. Ganesh (2019). The where of handovers by humans: Effect of partner characteristics, distance and visual feedback. PLoS ONE. 14(6): e0217129.

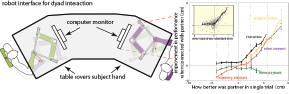

Neuroscience of human physical interactions

From a parent helping to guide their child during their first steps to a therapist supporting a patient, physical assistance enabled by haptic interaction is a fundamental means of improving motor abilities in humans. Understanding human control behavior during interactions is crucial for the development of robots for physical assistance. However, while individual motor control has been extensively studied in the literature, the examination of physical interactions has been scarce, due to the technical difficulties associated with observing and analyzing the key determinant of physical interaction: haptic feedback. With Etienne Burdet and Atsushi Takagi of Imperial College London, we overcame these difficulties by developing a new paradigm using a novel dual robotic interface that enabled us to examine the behaviors of two individuals when their hands are physically connected. We revealed that physical interaction is consistently beneficial to the partners. In particular, we could show that one improves with a better partner and even with a worse partner (Scientific Reports 4: 3824), which suggests advantages of interactive paradigms for sports training and physical rehabilitation. Furthermore, we clarified the computational processes underlying these results, showing that haptic information provided by touch and proprioception enables one to estimate the partner’s movement plan and use it to improve one’s own motor performance. We experimentally verified our model using a Turing test for physical interaction: we embodied our model as a controller in a robot partner and checked that it induces the same improvements in a human individual as interacting with a human partner (Nature Human Behaviour 1: 0054). These results generated interest in press outlets in Japan and London. They were also published in the CNRS magazine and have led to a patent (Japanese patent 特願2011-027711).
The results provide important new insights into the neural mechanism of physical interactions in humans and into how the exchange of haptic information is modulated by the interaction mechanics (PLoS Computational Biology 14(3): e1005971), and they promise collaborative robot systems with human-like assistance. Recently, using Granger analysis, we were also able to isolate, for the first time, specific characteristics of haptic information exchange between humans and to show that information exchange occurs within a specific frequency range of around 3 to 6 Hz (Colomer et al., under review).

Colomer, M. Dhamala, G. Ganesh, J. Lagarde (2022). Interacting humans use forces in specific frequencies to exchange information by touch. Preprint on HAL.

A. Takagi*, G. Ganesh*, T. Yoshioka, M. Kawato, E. Burdet (2017). Physically interacting individuals estimate the partner’s goal to enhance their movements. Nature Human Behaviour. 1:0054.

A. Takagi, F. Usai, G. Ganesh, V. Sanguineti, E. Burdet (2018). Haptic communication between humans is tuned by the hard or soft mechanics of interaction. PLoS Computational Biology. 14(3):e1005971.

G. Ganesh, A. Takagi, R. Osu, T. Yoshioka, M. Kawato, E. Burdet (2014). Two is better than one: Physical interactions improve motor performance in humans. Scientific Reports. 4:3824.

Melendez-Calderon, V. Komisar, G. Ganesh, E. Burdet (2011). Classification of strategies for disturbance attenuation in human-human collaborative tasks. IEEE Engineering in Medicine and Biology Conference (EMBC), 2011.

Neuroscience of anxiety induced motor deterioration in humans

Performance anxiety can profoundly affect performance, even in experts such as professional athletes and musicians. In collaboration with Dr. Masahiko Haruno of CINET and Dr. Takehiro Minamoto of Shimane University, we are using behavioral studies, fMRI and TMS to understand why human motor performance deteriorates in the presence of anxiety.

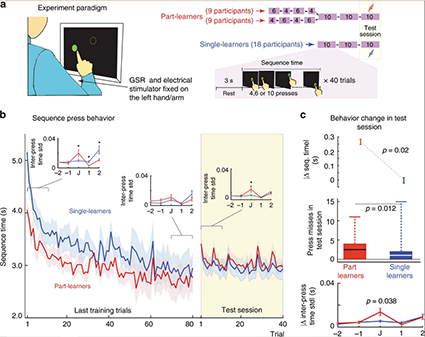

Previously, the neural mechanisms underlying anxiety-induced performance deterioration have predominantly been investigated for individual one-shot actions. Sports and music, however, are characterized by action sequences, where many individual actions are assembled to develop a performance. In this study we show that performance at the junctions between pre-learnt action sequences is particularly prone to anxiety. We identify the activation of the dorsal anterior cingulate cortex (dACC) as a cause of these deteriorations and show that the deteriorations can be attenuated with focal TMS to the dACC. The first results were published in Nature Communications 10: 4287. We are now working to develop a computational model of the mechanisms determining human motor deterioration due to anxiety.

G. Ganesh, T. Minamoto, M. Haruno (2019). Activity in dACC causes motor performance deterioration due to anxiety. Nature Communications. 10:4287.